Before diving into the reasons why end-to-end (E2E) testing struggles in large-scale microservice architectures, let’s recap some key traits of microservice architecture that are critical from a testing perspective:

- Independent Deployments: In a microservices environment, synchronization points are avoided. Teams should be able to deploy independently without waiting on others, especially other teams. There should be no external rules, like a code freeze, that prevent a team from deploying.

- Team Autonomy: Teams own their respective services and have minimal communication with other teams. Synchronization at the team level is also avoided.

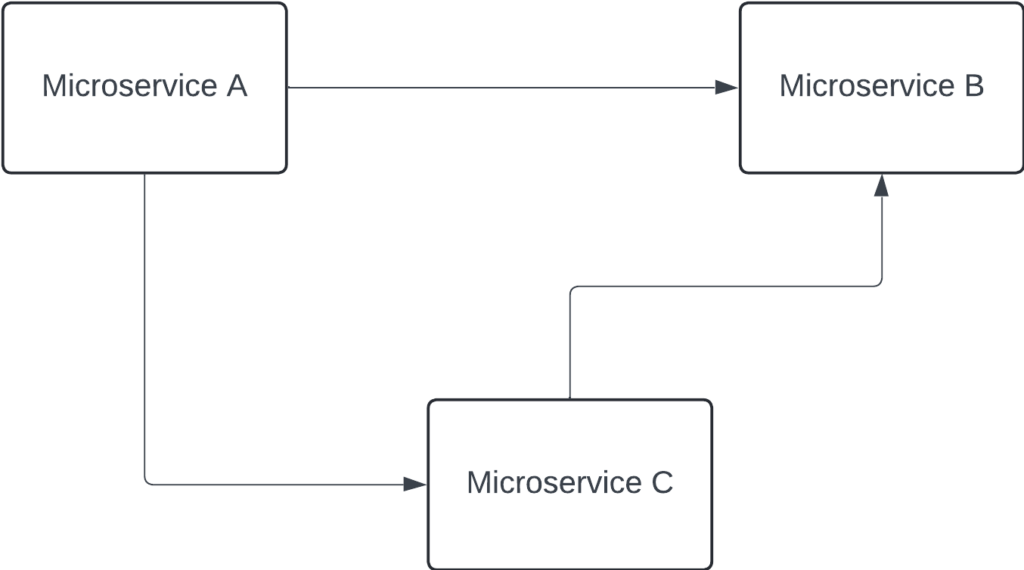

For the sake of simplicity, let’s assume your application consists of three microservices. Consider the following communication graph:

Examining this diagram, we can see that the microservices are interconnected, meaning any change to one microservice necessitates validating the functionality of all the others in E2E tests. Let’s analyze a couple of scenarios to understand how E2E tests operate in a small microservice environment:

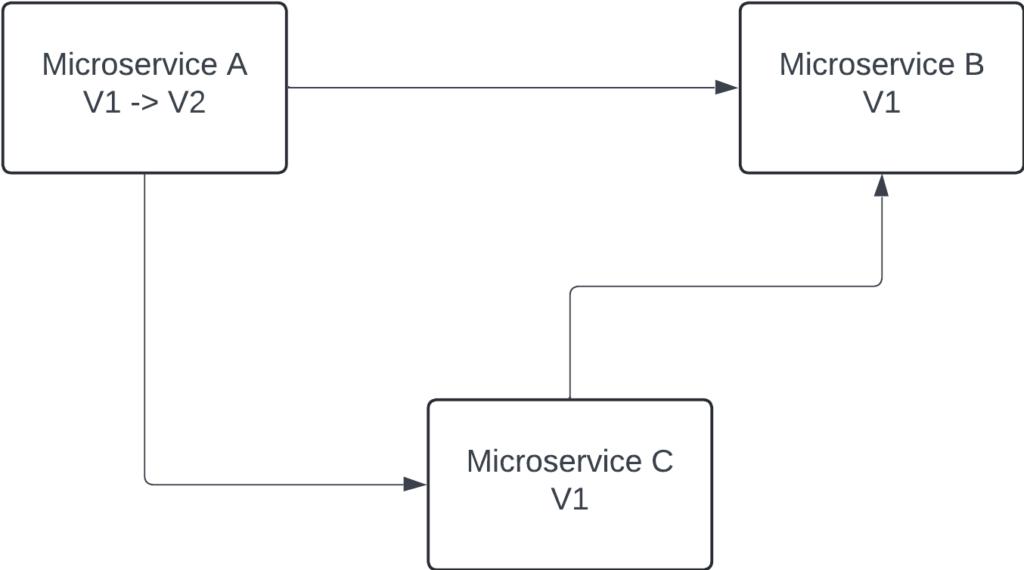

Imagine a system running in production, where all microservices are currently at version V1. Now, suppose Team A wants to deploy a new version of their microservice, V2.

On which version of the other microservices should we run our E2E tests?

| Microservice A | Microservice B | Microservice C |
| --- | --- | --- |
| V2 | V1 | V1 |

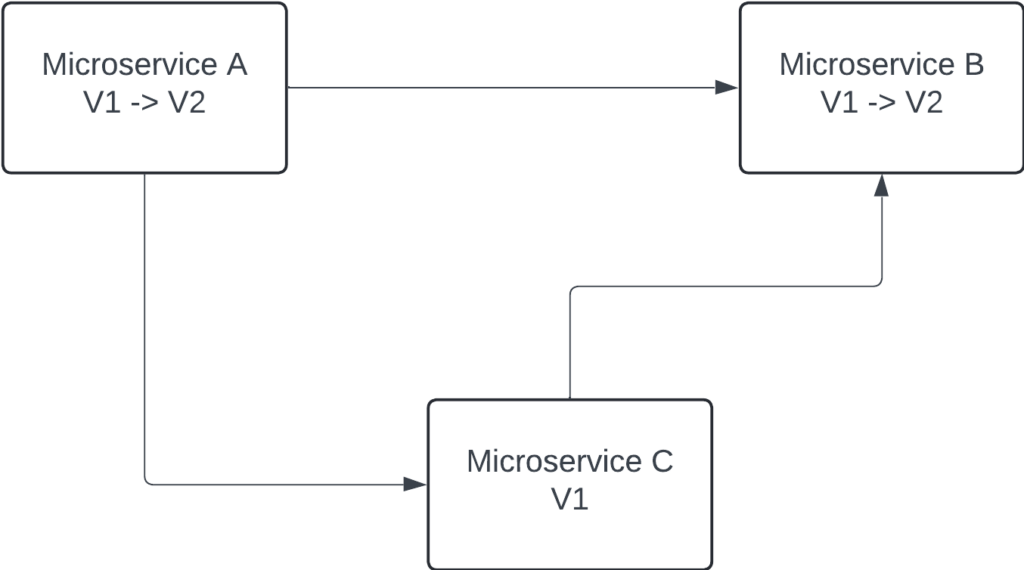

Now, let’s assume that Team B also wants to upgrade their microservice to version 2. They can proceed without hesitation because a fundamental principle of microservices is that there should be no synchronization points between deployments.

On which version of the other microservices should we run our E2E tests?

| Microservice A | Microservice B | Microservice C |
| --- | --- | --- |
| V2 | V2 | V1 |
| V1 | V2 | V1 |

Why do we now have two possibilities? Because the deployment of microservice B might complete faster than that of microservice A. Additionally, Team A might choose to roll back their microservice to version 1 for various reasons, such as poor performance or a bug in the code. With frequent rollbacks and deployments, how can we be sure that we’re testing the intended version? How will we know that the configuration didn’t change during our E2E test, even with a rolling update strategy in place?
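One way to at least detect that the system changed underneath a test run is to snapshot the deployed versions before and after the suite and compare them. The sketch below is only an illustration: `get_versions` and `run_tests` are hypothetical callables standing in for whatever your deployment tooling and test runner provide. It only compares the two end points, so a deploy followed by a rollback in the middle of the run would still go unnoticed.

```python
from typing import Callable, Dict

Versions = Dict[str, str]


def run_with_version_guard(
    get_versions: Callable[[], Versions],  # hypothetical: returns {"A": "V2", ...}
    run_tests: Callable[[], bool],         # hypothetical: runs the E2E suite
) -> dict:
    """Run the suite and report whether the deployed versions stayed the same."""
    before = get_versions()
    passed = run_tests()
    after = get_versions()
    return {
        "passed": passed,
        "stable": before == after,  # False if any service changed version
        "before": before,
        "after": after,
    }


# Simulated run: Team B deploys V2 while the suite is executing.
snapshots = iter([
    {"A": "V2", "B": "V1", "C": "V1"},
    {"A": "V2", "B": "V2", "C": "V1"},
])
result = run_with_version_guard(lambda: next(snapshots), lambda: True)
print(result["passed"], result["stable"])
```

In this simulated run the suite passes, but the result cannot be attributed to a single version combination, which is exactly the problem described above.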

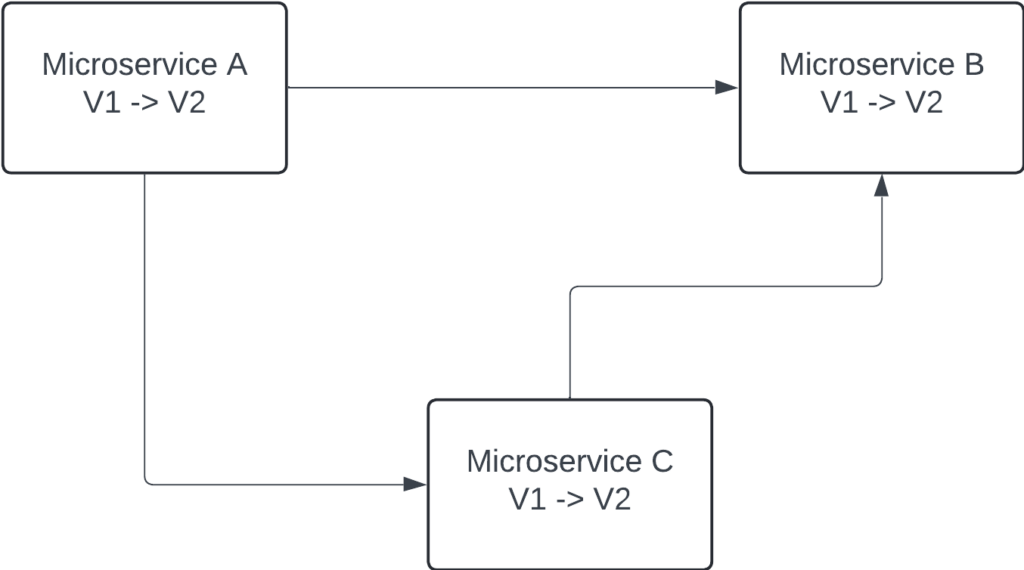

Now, let’s consider that Team C also wants to upgrade their microservice to version 2 simultaneously. How many possible combinations do we have now?

| Microservice A | Microservice B | Microservice C |
| --- | --- | --- |
| V2 | V1 | V1 |
| V1 | V2 | V1 |
| V1 | V1 | V2 |
| V2 | V2 | V1 |
| V2 | V1 | V2 |
| V1 | V2 | V2 |
| V2 | V2 | V2 |

Assuming these three deployments happen simultaneously (which is entirely possible), we would need at least seven testing environments to ensure our application works correctly in every scenario. That’s seven separate environments just to cover E2E testing for an architecture with only three microservices! Now, imagine the complexity if you had 10, 20, 50, or even 100 microservices. With two candidate versions per service, n services give 2^n − 1 combinations besides the one already in production: 1,023 for 10 services and more than a million for 20. The number of required testing environments would skyrocket, making E2E testing increasingly impractical and unmanageable.
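To make the counting concrete, here is a small sketch that enumerates the combinations from the table and shows how quickly the count grows. The function name and the assumption that each service has exactly two candidate versions (V1 and V2) are mine, chosen to match the scenario above.

```python
from itertools import product


def version_combinations(services, versions=("V1", "V2")):
    """List every version mix except the all-V1 baseline already in production."""
    baseline = tuple(versions[0] for _ in services)
    return [
        combo
        for combo in product(versions, repeat=len(services))
        if combo != baseline
    ]


combos = version_combinations(["A", "B", "C"])
print(len(combos))  # 7, matching the table above

# Growth for larger systems, assuming two candidate versions per service.
for n in (3, 10, 20, 50):
    print(n, 2**n - 1)
```

If rollbacks to older versions or more than two versions per service are in play, the real number is higher still.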

Summary

Some might argue that this situation is unlikely in their project because it’s rare for teams to deploy simultaneously. I often hear this from projects claiming to have a microservice architecture but failing to adhere to its fundamental principles. As Wikipedia defines it:

> In software engineering, a microservice architecture is an architectural pattern that arranges an application as a collection of loosely coupled, fine-grained services, communicating through lightweight protocols. One of its goals is to enable teams to develop and deploy their services independently. This is achieved by reducing several dependencies in the codebase, allowing developers to evolve their services with limited restrictions, and hiding additional complexity from users.

Another argument I often hear is that teams don’t merge code frequently, leading to less frequent deployments. While this might be true in less mature organizations, it highlights a lack of Continuous Integration (CI). True CI means merging changes continuously throughout the day, not just after everything is completed. Mature IT companies encourage developers to merge multiple times daily to fully reap the benefits of Continuous Integration.

I have previously worked on a large monolith-to-microservices refactoring project, and agree with this article; the old end-to-end test suite for the monolith simply no longer made sense with services being released on independent schedules. While the issue of “which versions shall I test” (as described above) is an important one, there are other problems. For example, in true microservice systems where each service has its own datastore, the state for tests now needs to be present in multiple databases rather than just one.

In the project I mentioned above, we moved to testing each component independently, plus tools to catch incompatible API changes before release. In addition, it is useful to have support for incremental rollouts where a new version of a service can be exposed only to test-users, then internal users, before starting to handle “real” production traffic. E2E tests do have some purpose when run regularly against _production environments_ in order to generate alerts for issues before real users encounter them. And above all, the ability to do effective and rapid rollbacks is important.
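The incremental rollout idea can be sketched as a routing decision per request. This is a simplified illustration, not the mechanism used in that project: the stage names, the user groups ("test", "internal", "external"), and the `pick_version` function are all assumptions made for the example.

```python
from enum import IntEnum


class Stage(IntEnum):
    """Hypothetical rollout stages, in the order described above."""
    TEST_USERS = 1
    INTERNAL_USERS = 2
    EVERYONE = 3


# Which user groups see the new version at each stage (assumed group names).
EXPOSED_GROUPS = {
    Stage.TEST_USERS: {"test"},
    Stage.INTERNAL_USERS: {"test", "internal"},
    Stage.EVERYONE: {"test", "internal", "external"},
}


def pick_version(user_group: str, stage: Stage) -> str:
    """Return the service version that should handle this user's request."""
    return "V2" if user_group in EXPOSED_GROUPS[stage] else "V1"


print(pick_version("test", Stage.TEST_USERS))          # V2
print(pick_version("external", Stage.INTERNAL_USERS))  # V1
print(pick_version("external", Stage.EVERYONE))        # V2
```

Rolling back then amounts to moving the stage backwards (or removing the new version entirely), which is why fast rollbacks pair well with this approach.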