In the dynamic world of software development, ensuring the reliability and performance of applications is paramount. Testing software applications is a critical phase that bridges the gap between development and deployment, safeguarding the user experience by identifying and rectifying defects. From automated tests that accelerate feedback cycles to comprehensive manual evaluations that capture intricate user interactions, the testing landscape is as diverse as it is essential. In this blog, we delve into the multifaceted realm of software testing, exploring its methodologies, tools, and best practices that empower developers to deliver robust, high-quality software. Join us as we uncover the nuances of this vital discipline, pivotal in achieving seamless and efficient software solutions.

You’ve likely encountered various representations of testing levels across numerous websites. While the core concepts remain consistent, the naming conventions often vary. This lack of standardization within the IT industry calls for a brief explanation of the testing levels depicted in the diagram below.

As illustrated in the diagram above, we can differentiate the test levels based on several key characteristics, such as:

- The time required to receive feedback from the results

- The fragility of the tests

- The development effort needed to create them

- The granularity at which they operate

- The cost associated with running them

- Whether they can be run in a developer’s local environment

To evaluate these characteristics, this article will use a rating scale from 1 to 5, where 1 indicates a low factor and 5 indicates a high factor.

Unit testing

- Feedback time: Fast

- Fragility: Low

- Development effort: Low

- Granularity: Aggregate/service template level

- Cost: None

- Local environment friendly: yes

Unit testing stands as the most crucial level within the hierarchy of testing levels. The primary objective of unit tests is to ensure that the internal logic of the application functions correctly. These tests focus on verifying the coherence and correctness of individual units of code, without delving into the interactions between different components or aggregates.

However, testing every file in the application in isolation is generally inadvisable. Such an approach tightly couples the tests to the application’s internal logic, which makes maintenance harder. Instead, test services through their abstractions: avoid asserting on internal calls, method invocations, or the internal state of objects, and verify the contract of your aggregates/service templates instead. These tests must also be able to run without an internet connection.

For unit testing, plain Java combined with a testing framework is often sufficient. In more complex scenarios, mocking libraries such as Mockito can be highly beneficial.
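To make "verify the contract, not the internals" concrete, here is a minimal sketch in plain Java. It deliberately avoids JUnit and Mockito so it stays self-contained; `OrderService`, `PriceCatalog`, and the 10% bulk discount are hypothetical. The checks talk to the service only through its public method and replace its dependency with a hand-written in-memory fake, so nothing here asserts which methods were called internally.

```java
import java.util.Map;

// Hypothetical port the service depends on (backed by a database in production).
interface PriceCatalog {
    long unitPriceCents(String sku);
}

// Hypothetical service under test: its public method is the contract we verify.
final class OrderService {
    private final PriceCatalog catalog;

    OrderService(PriceCatalog catalog) {
        this.catalog = catalog;
    }

    /** Returns the order total in cents; orders of 10 or more items get 10% off. */
    long totalCents(String sku, int quantity) {
        if (quantity <= 0) {
            throw new IllegalArgumentException("quantity must be positive");
        }
        long gross = catalog.unitPriceCents(sku) * quantity;
        return quantity >= 10 ? gross * 90 / 100 : gross;
    }
}

// In-memory fake: no network, no database, deterministic results.
final class InMemoryPriceCatalog implements PriceCatalog {
    private final Map<String, Long> prices;

    InMemoryPriceCatalog(Map<String, Long> prices) {
        this.prices = prices;
    }

    @Override
    public long unitPriceCents(String sku) {
        Long price = prices.get(sku);
        if (price == null) {
            throw new IllegalArgumentException("unknown sku: " + sku);
        }
        return price;
    }
}

// Contract-level checks: only inputs and outputs are asserted, never internal calls.
final class OrderServiceContractTest {
    static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    public static void main(String[] args) {
        OrderService service = new OrderService(new InMemoryPriceCatalog(Map.of("book", 1_500L)));

        check(service.totalCents("book", 2) == 3_000, "regular order is not discounted");
        check(service.totalCents("book", 10) == 13_500, "bulk order gets 10% off");

        boolean rejected = false;
        try {
            service.totalCents("book", 0);
        } catch (IllegalArgumentException expected) {
            rejected = true;
        }
        check(rejected, "non-positive quantity is rejected");
        System.out.println("All contract checks passed");
    }
}
```

In a real project, each `check` would typically become a JUnit test method. Because the assertions only touch inputs and outputs, the service internals can be refactored without touching the tests; a mocking library such as Mockito becomes worthwhile when a hand-written fake would grow too large.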

Unit testing does not differ significantly whether or not you use cloud services. However, if you need to unit test a service written for a proprietary cloud service that cannot be containerized (e.g., AWS Step Functions), make sure there is a reliable, well-documented way to perform these tests.

By understanding and properly implementing unit tests, developers can significantly enhance the reliability and maintainability of their applications. Unit tests should form the foundation of the testing pyramid.

Integration testing

- Feedback time: Relatively fast (slower than unit tests)

- Fragility: Low

- Development effort: Low/Medium

- Granularity: Interaction between components

- Cost: None

- Local environment friendly: yes

Integration testing focuses on verifying the interactions between an application and the components it depends on. Modern applications rarely operate as isolated components; they typically need to interact with various external systems to function. These interactions can include:

- Database Integration: Saving and retrieving the application’s state.

- Communication with Other Services: Both synchronous and asynchronous calls to external services.

- Infrastructure Components: Integrations with event brokers, message queues, and other middleware.

Each of these interactions involves dependencies that must be thoroughly tested to ensure the application works correctly in its intended environment. These dependencies are often referred to as application-dependent components. As with unit tests, these tests must be able to run without an internet connection.

The complexity of implementing integration tests can vary significantly depending on the project’s circumstances, most notably whether the project is subject to vendor lock-in.

No Vendor Lock-In

If the application does not rely on proprietary or inaccessible services, integration testing is relatively straightforward. Open-source Docker images can be used to simulate dependent services locally. For instance, in Java projects, Testcontainers can facilitate running these Docker images during the testing phase.

Vendor Lock-In

For applications heavily reliant on proprietary services that are not publicly accessible, integration testing becomes more challenging. Here are some strategies to mitigate this issue:

1) Check for Published Docker Images

Some service providers publish Docker images that emulate their services (e.g., DynamoDB Local for Amazon DynamoDB).

2) Search for Compatibility with Public Docker Images

Check whether the service provider’s offering is compatible with an existing public Docker image. For example, AWS RDS Aurora is compatible with PostgreSQL and MySQL, and AWS DocumentDB is largely compatible with MongoDB.

3) Utilize LocalStack

LocalStack allows developers to emulate AWS services locally. However, it’s important to be aware of its limitations:

- The desired service’s API might not be implemented, especially for newer services.

- The API coverage might be incomplete, potentially missing edge cases.

- Some services may require a paid version of LocalStack, which can be costly.

- The local API might contain bugs, impacting the reliability of tests.

Weighing these limitations helps you decide whether LocalStack is a reliable enough basis for the integration tests of a given service.

4) Stub External Dependencies

In scenarios where these approaches are not feasible, another option is to create your own stubs of the services you use. Typically, a service uses only a small subset (1-5%) of the API operations offered by an AWS service, so internal teams can implement just those operations as WireMock stubs. This approach gives you full control over the exposed operations, but it also demands significant development effort, which is why it is often considered a last-resort option for integration testing.
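WireMock is the tool named above; to keep this sketch dependency-free, it uses the JDK's built-in `com.sun.net.httpserver.HttpServer` as a stand-in stub and `java.net.http.HttpClient` for the client. The `/customers/{id}` operation, its JSON payload, and `CustomerClient` are hypothetical. The stub implements only the single operation the client uses, which is the point of the approach: you reproduce a small slice of the external API, not all of it.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Stand-in for a WireMock stub: serves only the one operation our client actually uses.
final class CustomerApiStub implements AutoCloseable {
    private final HttpServer server;

    CustomerApiStub() throws IOException {
        // Port 0 lets the OS pick a free port, so parallel test runs do not collide.
        server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/customers/", exchange -> {
            if ("/customers/42".equals(exchange.getRequestURI().getPath())) {
                byte[] body = "{\"id\":42,\"status\":\"ACTIVE\"}".getBytes(StandardCharsets.UTF_8);
                exchange.getResponseHeaders().add("Content-Type", "application/json");
                exchange.sendResponseHeaders(200, body.length);
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            } else {
                exchange.sendResponseHeaders(404, -1); // every other customer is unknown
                exchange.close();
            }
        });
        server.start();
    }

    String baseUrl() {
        return "http://127.0.0.1:" + server.getAddress().getPort();
    }

    @Override
    public void close() {
        server.stop(0);
    }
}

// Hypothetical client under test; in production, baseUrl points at the real service.
final class CustomerClient {
    private final HttpClient http = HttpClient.newBuilder()
            .version(HttpClient.Version.HTTP_1_1)
            .build();
    private final String baseUrl;

    CustomerClient(String baseUrl) {
        this.baseUrl = baseUrl;
    }

    boolean isActive(int customerId) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/customers/" + customerId))
                .GET()
                .build();
        HttpResponse<String> response = http.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() == 404) {
            return false;
        }
        if (response.statusCode() != 200) {
            throw new IOException("unexpected status " + response.statusCode());
        }
        // Naive check to keep the sketch dependency-free; real code would parse the JSON.
        return response.body().contains("\"status\":\"ACTIVE\"");
    }
}
```

An integration test would open the stub with try-with-resources, point `CustomerClient` at `stub.baseUrl()`, and assert on the results, for example that customer 42 is active and any other ID is reported as unknown. With WireMock, the same stub is usually declared in a few lines of its Java DSL or JSON mappings.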

Integration testing is a critical aspect of ensuring the reliability and robustness of applications, particularly in complex environments with numerous dependencies. By employing strategies such as using Docker images, leveraging tools like LocalStack and AWS SAM CLI, and creating mock services, developers can effectively test their applications’ integrations. This approach not only enhances the application’s stability but also ensures that all components work seamlessly together.

More on integration testing in AWS can be found here.

Contract tests

- Feedback time: Fast (slower than unit tests)

- Fragility: Low

- Development effort: Low

- Granularity: Interaction between components

- Cost: None

- Local environment friendly: yes

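Contract tests verify that a service and its consumers agree on the requests and responses they exchange (the contract). There are two main types of contract testing: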

- Consumer-Driven Contract Testing: This approach involves the consumer of a service defining the expectations for the provider’s behaviour. The consumer creates the contract, which the provider then implements. This method ensures that the service meets the consumer’s needs.

- Producer-Driven Contract Testing: In this approach, the service provider defines the contract, outlining the expected inputs and outputs. The consumers then implement their services based on these specifications. This method helps maintain consistency and reliability across different services.

Contract tests not only validate these agreements but also facilitate the automatic generation of server stubs. These stubs can be used by consumers to test their own contracts, ensuring seamless integration and reducing the risk of deployment issues.

Useful Tools: Spring Cloud Contract, Pact
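The following plain-Java sketch models the idea behind consumer-driven contracts; it is not the Pact or Spring Cloud Contract API, and the `Request`, `Response`, `Contract`, and `ContractStubs` types are hypothetical. One contract object drives both the provider-side verification and the consumer-side stub, which is what keeps the two sides from drifting apart.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical, simplified model of one HTTP interaction.
record Request(String method, String path) {}

record Response(int status, Map<String, Object> body) {}

// A contract written by the consumer: for this request it expects this status and these fields.
record Contract(String name, Request request, int expectedStatus, Map<String, Object> expectedBody) {

    // Provider side: replay the request against the real handler and compare the result.
    // Extra fields in the provider's body are tolerated; missing or changed ones fail.
    boolean verify(Function<Request, Response> provider) {
        Response actual = provider.apply(request);
        return actual.status() == expectedStatus
                && actual.body().entrySet().containsAll(expectedBody.entrySet());
    }
}

final class ContractStubs {
    // Consumer side: derive a stub from the same contracts, so both sides share one source of truth.
    static Function<Request, Response> stubFrom(List<Contract> contracts) {
        return request -> contracts.stream()
                .filter(contract -> contract.request().equals(request))
                .findFirst()
                .map(contract -> new Response(contract.expectedStatus(), contract.expectedBody()))
                .orElse(new Response(404, Map.of()));
    }
}
```

The `containsAll` check tolerates extra fields in the provider's response, so a provider can add data without breaking existing consumers. Real tools add what this sketch omits: a contract DSL, publishing contracts between teams, and generating actual HTTP stub servers.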

By incorporating contract testing into your development process, you can significantly enhance the stability and reliability of your applications in an SOA environment. These tests help ensure that each service interaction is consistent and reliable, reducing the likelihood of unexpected issues during deployment. The effort of creating these tests is low, because much of the code can be generated from simple DSL scripts.

Acceptance tests

- Feedback time: Medium (significantly slower than integration tests)

- Fragility: Low

- Development effort: Medium

- Granularity: Application configuration, Main business flows

- Cost: Negligible

- Local environment friendly: no

Acceptance tests are the first level of testing performed in a live environment, typically the development environment. The primary objective of these tests is to verify the success of a deployment by ensuring that the application functions as expected in its intended environment. This includes checking database connectivity, API functionality, and overall system configuration within a specific domain.

Key Aspects of Acceptance Testing

- Deployment Verification: Acceptance tests confirm whether the deployment process was executed correctly. This involves validating the application’s ability to connect to databases, interact with APIs, and perform essential operations within its configured environment.

- Domain-Specific Testing: These tests focus on a single business domain, ensuring that the application meets its specified requirements. It is crucial to avoid testing across multiple domains to prevent dependency issues and ensure accurate feedback.

- Isolation from Other Teams: Acceptance tests should be isolated from other team components to avoid external influences that could skew the test results. Each team’s domain should be independently validated to ensure precise and reliable outcomes.

Useful Tools: Cucumber, TestNG, Newman
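A minimal sketch of deployment verification in plain Java, as an alternative to the tools listed. `DeploymentVerification` and its check names are hypothetical; the HTTP probe uses the JDK's `java.net.http.HttpClient`. Each check is named after what it verifies (configuration, database connectivity, an API endpoint of this team's domain), so a failing run points directly at the misconfigured piece.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;

// Runs named post-deployment checks for one domain and collects the ones that fail.
final class DeploymentVerification {
    private final Map<String, Callable<Boolean>> checks = new LinkedHashMap<>();

    DeploymentVerification check(String name, Callable<Boolean> probe) {
        checks.put(name, probe);
        return this;
    }

    /** Returns the names of failed checks, in registration order; an exception counts as a failure. */
    List<String> run() {
        List<String> failed = new ArrayList<>();
        for (Map.Entry<String, Callable<Boolean>> entry : checks.entrySet()) {
            try {
                if (!Boolean.TRUE.equals(entry.getValue().call())) {
                    failed.add(entry.getKey());
                }
            } catch (Exception e) {
                failed.add(entry.getKey() + " (" + e.getClass().getSimpleName() + ")");
            }
        }
        return failed;
    }

    // Helper probe: true if the endpoint answers with a 2xx status within five seconds.
    static Callable<Boolean> httpOk(HttpClient client, URI uri) {
        return () -> {
            HttpRequest request = HttpRequest.newBuilder(uri)
                    .timeout(Duration.ofSeconds(5))
                    .GET()
                    .build();
            int status = client.send(request, HttpResponse.BodyHandlers.discarding()).statusCode();
            return status >= 200 && status < 300;
        };
    }
}
```

In a pipeline, the checks would target the freshly deployed development environment, for example `httpOk` against a health endpoint of the service (the URL depends on your deployment) plus a database probe, and the job would fail whenever `run()` returns a non-empty list.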

By incorporating acceptance tests into your development workflow, you can promptly identify and address deployment issues, ensuring that your application is correctly configured and operational within its designated domain. This level of testing is essential for maintaining the integrity and functionality of your software in a live environment.

E2E tests

- Feedback time: Slow

- Fragility: High

- Development effort: High

- Granularity: Main business flows

- Cost: Significant

- Local environment friendly: no

While End-to-End (E2E) tests have their place in the testing ecosystem, it is essential to recognize their specific use cases and inherent limitations. E2E tests are invaluable for validating the primary business scenarios of an application, ensuring that the entire workflow functions correctly from start to finish. However, they should not be relied upon for testing edge cases, as these tests are often time-consuming and prone to fragility.

Key Considerations for E2E Testing

- Scope and Focus: E2E tests should be limited to verifying the main business scenarios of the application. This includes essential user interactions and critical workflows that must function seamlessly. For instance, in a banking application, an E2E test might validate the process of making a payment from the frontend interface through to the backend processing.

- Frontend and Backend Testing:

  - Frontend E2E Testing: This involves simulating user interactions with the application’s UI to ensure that key scenarios are executed correctly. Tools like Playwright can be used to automate these UI interactions.

  - Backend E2E Testing: This focuses on verifying the application’s functionality via its APIs, ensuring that backend processes perform as expected. Cucumber can be employed to write and run these tests, simulating real-world API usage.

- Fragility and Debugging: E2E tests are often fragile and do not always provide clear insight into why a system failed. When an E2E test fails, identifying the root cause usually requires deeper investigation. Additionally, E2E tests can yield false positives or false negatives, leading to misinterpretations of the system’s health.

- Complementary Role: E2E tests should not be the sole method of system validation. They are best used as a final safety check to confirm that primary business scenarios are functioning end-to-end. Relying exclusively on E2E tests can be risky due to their susceptibility to errors and extended execution times.

Useful Tools: Cucumber, Playwright
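A minimal sketch of a backend E2E check for the banking payment scenario mentioned above, written in plain Java rather than Cucumber. `PaymentApi`, the account IDs, the `SETTLED` status, and the timeouts are hypothetical. Because backend flows are often asynchronous, the sketch polls with a deadline instead of sleeping for a fixed time; fixed sleeps are a common source of the fragility described above.

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

// Hypothetical client for the public API of a banking application.
interface PaymentApi {
    String submitPayment(String fromAccount, String toAccount, long amountCents);

    String paymentStatus(String paymentId);
}

final class PaymentE2E {
    /** Polls until the condition holds or the timeout elapses, instead of a fixed sleep. */
    static boolean eventually(BooleanSupplier condition, Duration timeout, Duration interval)
            throws InterruptedException {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() - deadline >= 0) {
                return false;
            }
            Thread.sleep(interval.toMillis());
        }
    }

    /** Main business flow only: a submitted payment must eventually settle. */
    static void paymentSettles(PaymentApi api) throws InterruptedException {
        String id = api.submitPayment("ACC-1", "ACC-2", 2_500);
        boolean settled = eventually(() -> "SETTLED".equals(api.paymentStatus(id)),
                Duration.ofSeconds(30), Duration.ofMillis(500));
        if (!settled) {
            throw new AssertionError("payment " + id + " did not settle; last status: " + api.paymentStatus(id));
        }
    }
}
```

Against a real environment, `PaymentApi` would wrap HTTP calls to the deployed backend; the failure message includes the last observed status to make the investigation after a failed run a little easier.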

Personally, I’m not a fan of E2E tests because they are resource-intensive, slow, and often unreliable. In microservices, we should aim to minimize or even eliminate E2E tests (see ‘Why E2E testing will never work in Microservice Architectures’), though this isn’t always feasible.

Chaos & Performance tests, Synthetic monitoring

- Feedback time: Slow

- Fragility: High

- Development effort: High

- Granularity: Infrastructure

- Cost: Medium

- Local environment friendly: no

In addition to traditional testing methodologies, advanced testing strategies such as Chaos Testing, Synthetic Monitoring, and Performance Testing play a crucial role in ensuring the robustness and reliability of modern applications. These tests should be executed on a scheduled basis to continuously validate the application’s performance and resilience under various conditions. Let’s explore these testing strategies in more detail:

Chaos Testing

Chaos Testing focuses on the infrastructure’s resilience and fault tolerance. The objective is to identify potential weaknesses by simulating disruptive events and observing how the system responds. Key scenarios include:

- Application Failures: Testing the impact of one or more applications going offline.

- Network Partitions: Simulating network interruptions to evaluate how the system handles connectivity issues.

- Load Balancer Failures: Assessing the system’s response when the load balancer fails to route traffic.

By intentionally introducing chaos, organizations can uncover hidden vulnerabilities and improve their infrastructure’s ability to withstand unexpected disruptions.

Useful tools: Chaos Monkey, Chaos Mesh, Gremlin, AWS FIS
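Chaos tools such as these inject faults at the infrastructure level, for example by terminating instances or containers or by partitioning networks. The same idea can be sketched in-process: wrap a dependency, switch on a fault that simulates it going offline, and assert that the caller degrades gracefully instead of failing. This is a simplified, hypothetical illustration (`Recommendations`, `ChaosRecommendations`, and `HomePage` are made up), not how any of the listed tools work internally.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical downstream dependency, e.g. a recommendations service.
interface Recommendations {
    String forUser(String userId);
}

// Wraps the real dependency and, while the fault is switched on, simulates it being offline.
final class ChaosRecommendations implements Recommendations {
    private final Recommendations delegate;
    private final AtomicBoolean offline = new AtomicBoolean(false);

    ChaosRecommendations(Recommendations delegate) {
        this.delegate = delegate;
    }

    void setOffline(boolean value) {
        offline.set(value);
    }

    @Override
    public String forUser(String userId) {
        if (offline.get()) {
            throw new IllegalStateException("injected fault: recommendations offline");
        }
        return delegate.forUser(userId);
    }
}

// Caller under test: it must keep serving pages (with a fallback) when the dependency fails.
final class HomePage {
    private final Recommendations recommendations;

    HomePage(Recommendations recommendations) {
        this.recommendations = recommendations;
    }

    String render(String userId) {
        try {
            return "Home | " + recommendations.forUser(userId);
        } catch (RuntimeException e) {
            return "Home | popular items"; // degraded, but still available
        }
    }
}
```

The experiment is then: render a page with the fault off, switch it on, and check that the page still renders with the fallback content.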

Synthetic Monitoring

Synthetic Monitoring involves the continuous and automated verification of services’ responsiveness. Unlike traditional monitoring, which relies on real user interactions, synthetic monitoring simulates user queries from various locations around the globe. Key aspects include:

- Global Reach: Performing checks from multiple geographic locations to ensure consistent performance.

- Aggregated Metrics: Collecting and aggregating the results into a single, comprehensive metric to monitor service health.

Synthetic Monitoring is a proactive approach that helps identify potential issues before they affect real users, making it an essential component of a comprehensive testing strategy.

Useful Tools: New Relic, Splunk, Datadog, Pingdom
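These tools collect and aggregate the results for you. As a rough illustration of the "single, comprehensive metric" idea, the sketch below folds probe results from several locations into an availability percentage and a latency percentile. The `ProbeResult` shape and the choice of the nearest-rank percentile method are assumptions for illustration.

```java
import java.util.List;

// One synthetic check executed from a given location (hypothetical data model).
record ProbeResult(String region, boolean success, long latencyMillis) {}

final class SyntheticSummary {
    /** Share of successful probes across all regions, as a percentage. */
    static double availabilityPercent(List<ProbeResult> results) {
        if (results.isEmpty()) {
            throw new IllegalArgumentException("no probe results");
        }
        long ok = results.stream().filter(ProbeResult::success).count();
        return 100.0 * ok / results.size();
    }

    /** Nearest-rank percentile of latency, computed over successful probes only. */
    static long latencyPercentileMillis(List<ProbeResult> results, double percentile) {
        long[] sorted = results.stream()
                .filter(ProbeResult::success)
                .mapToLong(ProbeResult::latencyMillis)
                .sorted()
                .toArray();
        if (sorted.length == 0) {
            throw new IllegalArgumentException("no successful probes");
        }
        int rank = (int) Math.ceil(percentile / 100.0 * sorted.length);
        return sorted[Math.max(rank, 1) - 1];
    }
}
```

Failed probes lower availability but are excluded from the latency figure, so an outage in one region shows up in one number instead of distorting both.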

Performance Testing

Performance Testing evaluates the application’s ability to handle unusual or peak loads. It is designed to identify performance bottlenecks and ensure that the application can scale effectively under stress. Key objectives include:

- Load Handling: Assessing how the application performs under heavy traffic conditions.

- Stress Testing: Pushing the application to its limits to observe its behavior under extreme conditions.

- Scalability Testing: Ensuring that the application can scale up or down based on demand.

By simulating high-traffic scenarios, performance testing helps ensure that the application remains responsive and reliable even during peak usage periods.
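Dedicated tools such as JMeter or Gatling are the right choice for real load tests. The sketch below only shows the core mechanics they automate: issue many calls concurrently, record each latency, and summarize throughput, errors, and a high percentile. `LoadDriver` is a hypothetical helper; measuring in-process like this ignores network effects and the limits of a closed-loop driver (for example, coordinated omission).

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Toy closed-loop load driver: `concurrency` workers execute `requests` calls in total.
final class LoadDriver {
    record Result(int requests, int errors, long p95Micros, double requestsPerSecond) {}

    static Result run(Runnable operation, int requests, int concurrency) throws InterruptedException {
        if (requests <= 0 || concurrency <= 0) {
            throw new IllegalArgumentException("requests and concurrency must be positive");
        }
        List<Callable<Long>> tasks = new ArrayList<>();
        for (int i = 0; i < requests; i++) {
            tasks.add(() -> {
                long start = System.nanoTime();
                operation.run();
                return (System.nanoTime() - start) / 1_000; // latency in microseconds
            });
        }
        ExecutorService pool = Executors.newFixedThreadPool(concurrency);
        long begin = System.nanoTime();
        List<Future<Long>> futures;
        try {
            futures = pool.invokeAll(tasks); // blocks until every call has finished
        } finally {
            pool.shutdown();
        }
        double elapsedSeconds = (System.nanoTime() - begin) / 1e9;

        List<Long> latencies = new ArrayList<>();
        int errors = 0;
        for (Future<Long> future : futures) {
            try {
                latencies.add(future.get());
            } catch (ExecutionException e) {
                errors++; // the operation threw, so count a failed request
            }
        }
        Collections.sort(latencies);
        // Nearest-rank 95th percentile over successful calls (0 if every call failed).
        long p95 = latencies.isEmpty() ? 0 : latencies.get((int) Math.ceil(0.95 * latencies.size()) - 1);
        return new Result(requests, errors, p95, requests / elapsedSeconds);
    }
}
```

Raising `concurrency` step by step while watching `p95Micros` and `errors` is the in-miniature version of load and stress testing: the point where latency climbs sharply or errors appear marks the bottleneck.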

Useful Tools: JMeter or Gatling for Performance Testing

Incorporating Chaos Testing, Synthetic Monitoring, and Performance Testing into your testing strategy provides a holistic approach to application reliability and performance. These advanced tests complement traditional methodologies, offering deeper insight into the system’s resilience, stability, and scalability. Implemented systematically, they let organizations proactively identify and mitigate potential issues, ensuring their applications are robust, reliable, and ready to handle real-world challenges.

CI/CD testing implementation

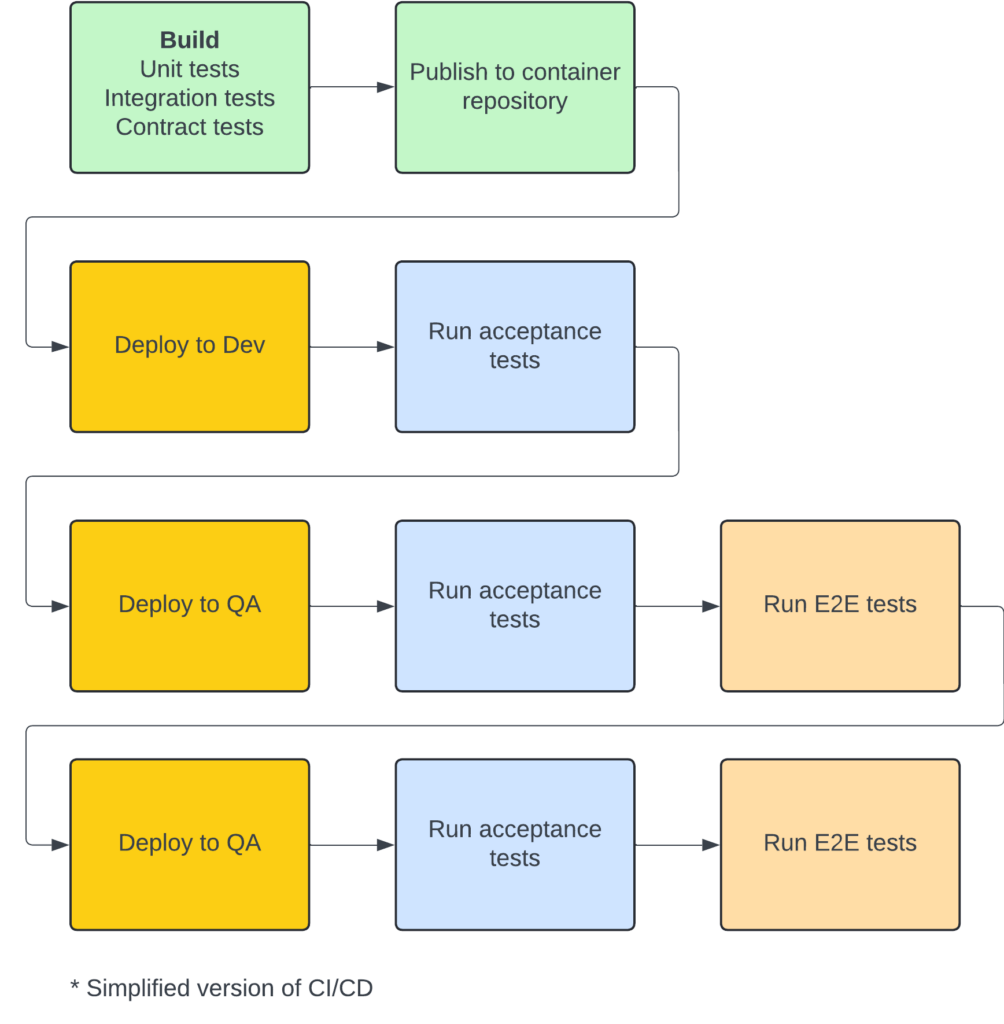

Now that we have a broad understanding of what each of these test levels does, we can examine how they fit into the CI/CD pipeline shown in the diagram above.

One notable aspect of this pipeline is the deliberate omission of End-to-End (E2E) tests in the development environment. By its very nature, the development environment is unstable, which makes it unreliable for testing services outside our immediate business domain. The first truly stable environment, one that behaves consistently under all circumstances, is typically the subsequent staging (or production) environment.

Development Environments in Testing

- Unstable Development Environment: Due to frequent changes and updates, the development environment should not be used for E2E testing. Relying on it for such tests can lead to inconsistent and unreliable results.

- Stable Environment: The first stable environment, often the staging environment, is where E2E tests should be executed. This environment mirrors production closely and provides a reliable setting for comprehensive testing.

Comprehensive Testing Across All Levels

Despite the increasing reliance on proprietary and commercial solutions, it remains essential to thoroughly test all components at every level of the testing pyramid. This holistic approach ensures the reliability and functionality of the entire application. Although developers may encounter various challenges during the development process, a structured testing strategy and the right tools can effectively manage and overcome these obstacles.

Summary: Testing Traits

To conclude, in this article I have presented the testing levels we usually have in our applications. Although they check different aspects and focus on different components, they should all share the following key traits:

- Stability: tests must never be fragile. No matter how many times the tests are run, if the code has not changed between runs, the results should be exactly the same. Failing to achieve this erodes trust in the tests, and they end up being ignored.

- Independence: tests should be self-contained. A test’s inputs should never rely on the outputs of other tests.

- Objectivity: tests should be conducted without bias, focusing solely on whether the software meets the requirements.

- Efficiency: tests should aim to detect the most significant defects using the least amount of resources, including time, effort, and tools.
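A common source of the flakiness described under Stability is a hidden dependence on wall-clock time. A minimal sketch, assuming a hypothetical `Session` with a 30-minute timeout: the class takes a `java.time.Clock` instead of calling `Instant.now()` directly, so a test controls time and gets the same result on every run. For the simplest cases, the JDK's `Clock.fixed(...)` is enough; the small `MutableClock` here (also hypothetical) adds the ability to move time forward.

```java
import java.time.Clock;
import java.time.Duration;
import java.time.Instant;
import java.time.ZoneId;
import java.time.ZoneOffset;

// Hypothetical domain class: it asks an injected Clock for the time instead of calling Instant.now().
final class Session {
    private static final Duration TIMEOUT = Duration.ofMinutes(30);
    private final Clock clock;
    private final Instant createdAt;

    Session(Clock clock) {
        this.clock = clock;
        this.createdAt = Instant.now(clock);
    }

    boolean isExpired() {
        return Instant.now(clock).isAfter(createdAt.plus(TIMEOUT));
    }
}

// Test clock: time only moves when the test calls advance(), so every run sees the same instants.
final class MutableClock extends Clock {
    private Instant now;

    MutableClock(Instant start) {
        this.now = start;
    }

    void advance(Duration duration) {
        now = now.plus(duration);
    }

    @Override
    public ZoneId getZone() {
        return ZoneOffset.UTC;
    }

    @Override
    public Clock withZone(ZoneId zone) {
        return Clock.fixed(now, zone); // frozen snapshot; sufficient for this sketch
    }

    @Override
    public Instant instant() {
        return now;
    }
}
```

In production, the session would be created with `Clock.systemUTC()`. In a test, create it with a `MutableClock` at a fixed instant, advance exactly 30 minutes (not yet expired), then one more second (expired); each test builds its own clock, which also keeps the tests independent of one another.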